You’ve more or less landed on one of the likely next steps for this technology. The “AI” we have either doesn’t have enough input data or doesn’t have hard-coded approaches like the one you mentioned, giving it a sort of blueprint for anatomy. So it ends up doing well on simpler body parts like arms, legs, and torsos, which look similar from different perspectives, and badly on hands or feet (think about how many ways the digits can overlap and how fingers can end up hidden). Of course, the issue with any sort of “blueprint” is making it abstract enough to work across different art styles and creatures (probably very far off for the latter, for obvious reasons). Which loops back to why AI was (first, or at least first successfully) applied to digital art instead of a hard-coded approach: it’s a lot easier to slap a bunch of labels onto pictures and data (the math and code weren’t TOO hard in the grand scheme of things).

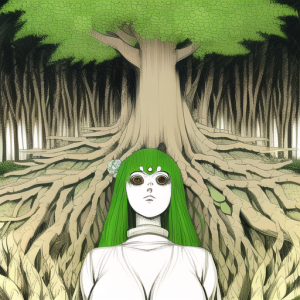

Edit: Alien abduction and replacement confirmed, though it seems the aliens forgot that Nene isn't a double H or whatever those things are, orrrr that humans only have five toes on each foot...

This reminds me of how some of the more advanced 2D sprites were made during the awkward transition from 2D to 3D gaming. Some companies built 3D models of all the characters, took a bunch of stills of them in motion, and turned those into sprites. That kind of mindset and methodology feels like a logical progression here, too: create a basic wireframe 3D body model with all the right features and proportions, let the AI puppet it into the desired pose, then paint a 2D image over the top, using the camera perspective as a reference point.
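A rough sketch of the “camera perspective as a reference point” step, just to make the idea concrete. It assumes a simple pinhole camera and a posed skeleton given as named 3D joint positions already in camera space (y axis pointing down, like image rows). `Camera`, `project_pose`, and `draw_order` are made-up names for illustration, not any real tool's API. The depth sort is the painter's-algorithm trick for deciding which overlapping finger gets drawn on top:

```python
from dataclasses import dataclass


@dataclass
class Camera:
    # Hypothetical pinhole camera; all values are in pixels.
    focal_length: float
    cx: float  # principal point x (usually the image center)
    cy: float  # principal point y


def project_point(cam, x, y, z):
    """Project one camera-space 3D point onto the 2D image plane."""
    if z <= 0:
        raise ValueError("point is behind the camera")
    u = cam.focal_length * x / z + cam.cx
    v = cam.focal_length * y / z + cam.cy
    return (u, v)


def project_pose(cam, joints):
    """Map {joint_name: (x, y, z)} to {joint_name: (u, v)} image coordinates."""
    return {name: project_point(cam, *pos) for name, pos in joints.items()}


def draw_order(joints):
    """Joint names sorted farthest-first, so nearer digits are painted over hidden ones."""
    return sorted(joints, key=lambda name: joints[name][2], reverse=True)


if __name__ == "__main__":
    cam = Camera(focal_length=500.0, cx=256.0, cy=256.0)
    hand = {"wrist": (0.5, 0.25, 2.0), "index_tip": (0.45, 0.2, 1.8)}
    print(project_pose(cam, hand))
    print(draw_order(hand))
```

The projected 2D points would be the “reference” the painting step follows, and the draw order is one crude way of handling fingers that hide behind each other.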

![1girl, facing viewer, blue eyes, [grey eyes], white hair, medium hair, hair bow, s-904332640 (1).png](https://thevirtualasylum.com/data/attachments/6/6747-48f1f38ce9575675a608b23f0ce9d666.jpg)